References:

https://github.com/QwenLM/Qwen-7B

https://github.com/QwenLM/Qwen-VL

https://huggingface.co/Qwen

To download large models from within mainland China, use ModelScope: https://modelscope.cn/

Alternatively, use the Hugging Face mirror site: https://hf-mirror.com/

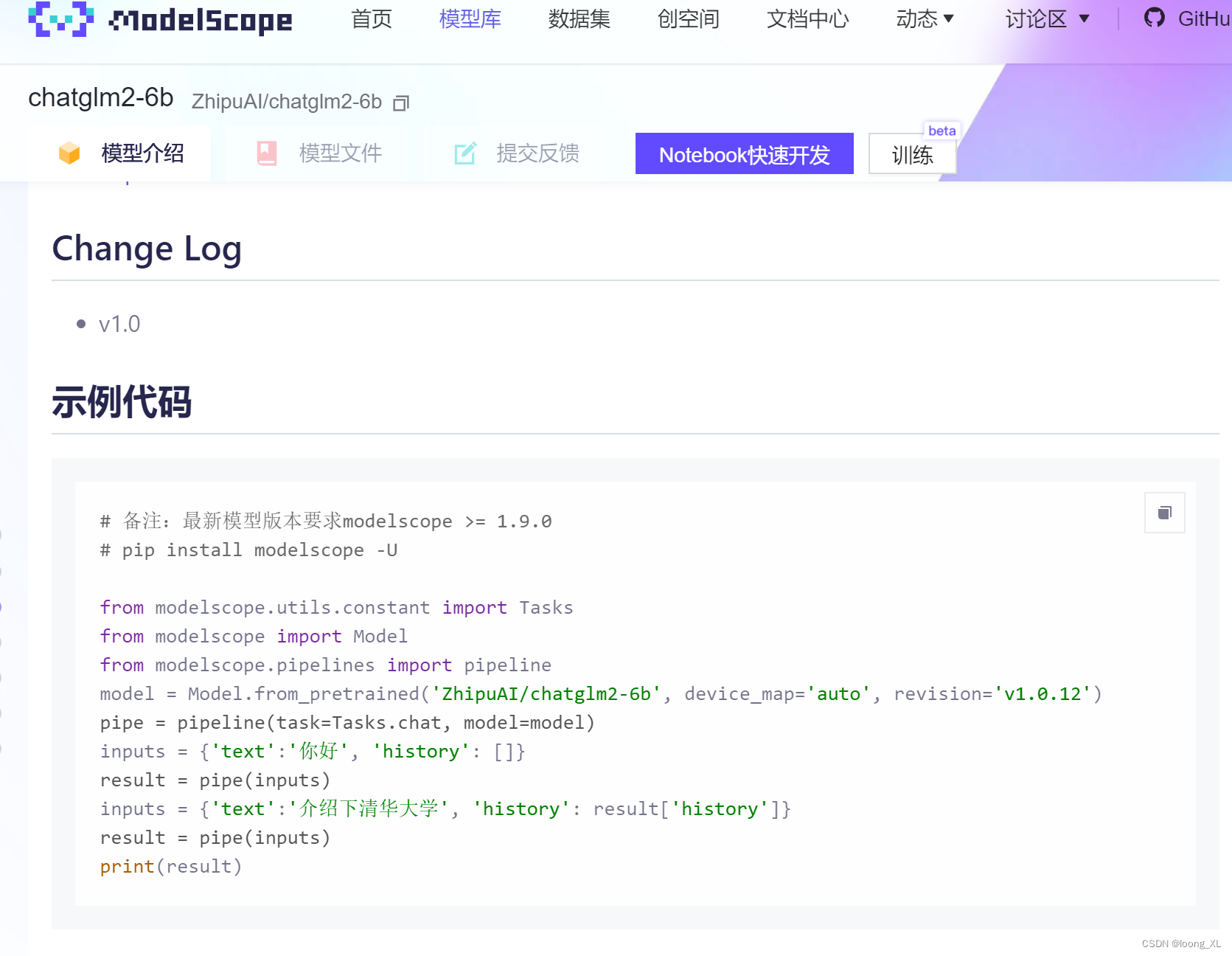
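The mirror can also be used from Python. The following is a minimal sketch, assuming the `huggingface_hub` package is installed and that the mirror is selected through the `HF_ENDPOINT` environment variable; the helper name `download_from_mirror` is hypothetical and not part of any library.

```python
import os

# Point huggingface_hub at the mirror. The variable is set before
# huggingface_hub is imported, because the endpoint is read at import time.
os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"


def download_from_mirror(repo_id, local_dir):
    """Download every file of a model repository and return the local path."""
    # Imported lazily so that HF_ENDPOINT above is already in place.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)
```

For example, `download_from_mirror("Qwen/Qwen-VL-Chat-Int4", "./Qwen-VL-Chat-Int4")` would fetch that repository into the given folder; both the repository id and the target folder here are illustrative choices.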

from modelscope import Model

# By default, the model is downloaded to the local .cache/modelscope/hub directory

model = Model.from_pretrained('ZhipuAI/chatglm2-6b', device_map='auto', revision='v1.0.12')

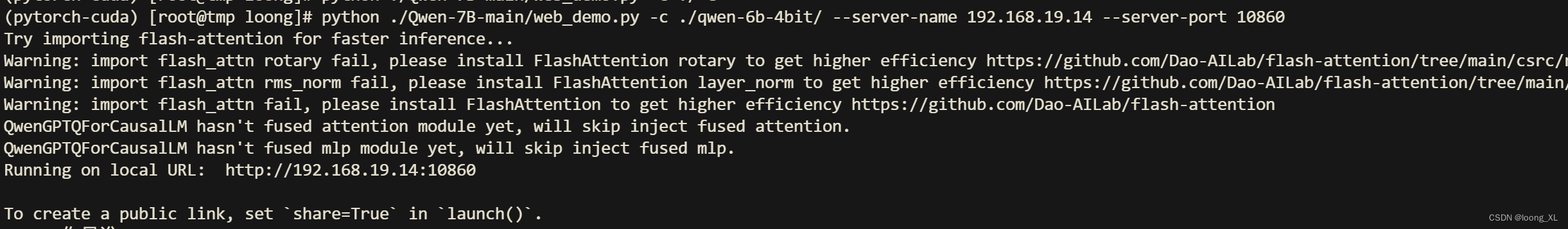
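`Model.from_pretrained` downloads the weights and loads them in one step. If only the files on disk are needed, for instance to pass a folder to a demo script, a sketch along the following lines may be enough. It assumes the `modelscope` package is installed; `download_model` and `DEFAULT_CACHE` are hypothetical names introduced for illustration.

```python
from pathlib import Path

# Default download location as described above, relative to the home directory.
DEFAULT_CACHE = Path.home() / ".cache" / "modelscope" / "hub"


def download_model(model_id, cache_dir=None):
    """Fetch the files of a ModelScope model and return the local directory."""
    # Lazy import: requires `pip install modelscope`.
    from modelscope import snapshot_download

    # cache_dir=None keeps ModelScope's default cache location.
    return snapshot_download(model_id, cache_dir=cache_dir)
```

The returned path is the kind of directory that can be passed to a demo script's `-c` option. Any model id passed in, such as `qwen/Qwen-7B-Chat`, should be checked against the ModelScope site first.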

After downloading the code and the model, run:

python ./Qwen-7B-main/web_demo.py -c ./qwen-6b-4bit/ --server-name 0.0.0.0 --server-port 10860


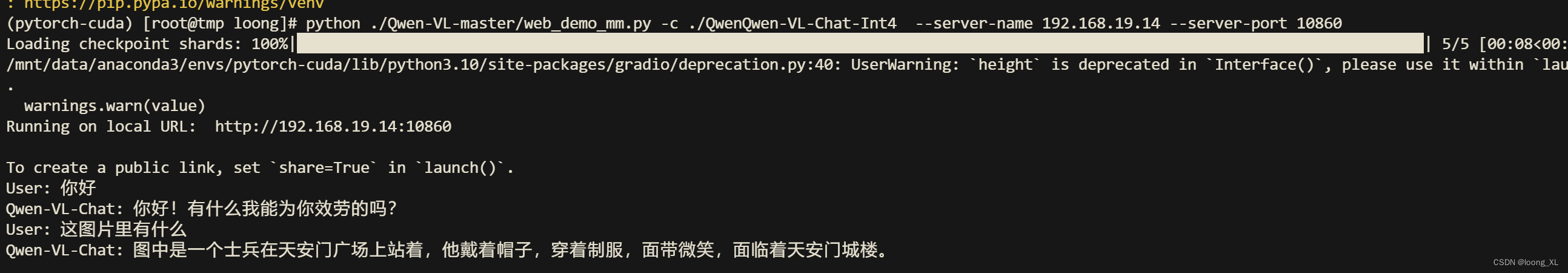
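As an alternative to the web demo, the same checkpoint folder can be queried from a short script. This is a sketch following the usage pattern in the Qwen repository README, assuming `transformers` and the model's other dependencies are installed and that the folder holds a chat checkpoint; `chat_once` is a hypothetical helper name.

```python
def chat_once(checkpoint_dir, prompt):
    """Load a Qwen chat checkpoint from disk and return one reply."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code is needed because Qwen ships its own modeling code.
    tokenizer = AutoTokenizer.from_pretrained(checkpoint_dir, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        checkpoint_dir, device_map="auto", trust_remote_code=True
    ).eval()

    # model.chat comes from the checkpoint's remote code, not from transformers itself.
    response, _history = model.chat(tokenizer, prompt, history=None)
    return response
```

Calling `chat_once("./qwen-6b-4bit/", "Hello")` would load the folder used in the command above. Loading takes a while and needs enough GPU memory, so for repeated questions it is better to keep the model object around than to call this helper in a loop.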

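Running Qwen-VL-Chat-Int4 requires a GPU with roughly 15 GB of memory:

(Most runtime errors can be fixed by upgrading the package named in the error, for example accelerate or peft.)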

python ./Qwen-VL-master/web_demo_mm.py -c ./QwenQwen-VL-Chat-Int4 --server-name 0.0.0.0 --server-port 10860

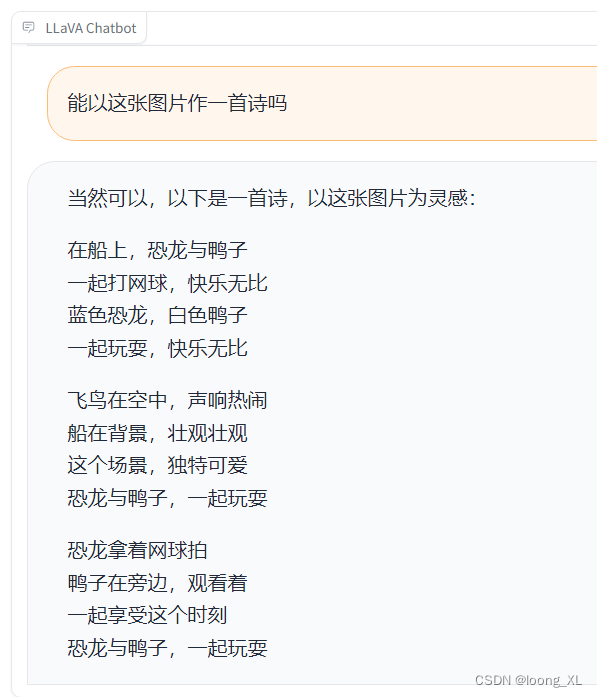

The results are good as well.
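When a run fails with a package-related error, as noted above, it helps to see which versions are installed before upgrading. The following is a small sketch using only the Python standard library; the function names are hypothetical, and the package list is just the two packages mentioned above.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def upgrade_command(packages):
    """Build the pip command that upgrades the given packages."""
    return "pip install --upgrade " + " ".join(packages)
```

For instance, `installed_versions(["accelerate", "peft"])` reports what is currently installed, and `upgrade_command(["accelerate", "peft"])` gives the command to run in the shell.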

Reference: https://blog.csdn.net/weixin_42357472/article/details/132664224
